- Published on: 2024-10-12 08:51 pm

Reloading The Final Update: Avatar of Change through AI-Ethics, Heroism and Self-Discovery

Mind or Metal Level III

The war of thoughts that fathers the universe,

The clash of forces struggling to prevail

In the tremendous shock that lights a star

As in the building of a grain of dust,

The grooves that turn their dumb ellipse in space

Ploughed by the seeking of the world’s desire,

The long regurgitations in Time’s flood,

The torment edging the dire force of lust

That wakes kinetic in earth’s dullard slime

And carves a personality out of mud,

The sorrow by which Nature’s hunger is fed,

The oestrus which creates with fire of pain,

The fate that punishes virtue with defeat,

The tragedy that destroys long happiness,

The weeping of Love, the quarrel of the Gods,

Ceased in a truth which lives in its own light.

-Sri Aurobindo

Introduction: The Heroism begins…

Once upon a time, there was a king in the digital kingdom of my college days, where I roamed like a rogue knight. My weapon of choice? A keyboard sharper than a double-edged sword and a flawless Internet connection. My innocent strategy? As the Bhishma Parva of the Mahabharata says, “Those engaged in a war of words would be countered with words.” And my targets? Other religions and communities, often those I deemed different, simply for the thrill of it. In other words, it was like playing Age of Empires or Clash of Clans, only now in real life and just as entertaining! It was as if I had set off on a quest in a first-person shooter, at once aware and blissfully unaware that I was the villain in someone else’s story. Ah, the irony! Who knew that the player I thought I was, wielding the power of anonymity, would soon find himself dodging digital grenades of karma?

But my high score in cyberbullying came at a steep cost. The thrill of the hunt was overshadowed by what lurked in my inbox: abuse and death threats! The reality check hit me harder than a surprise boss fight, forcing me to confront the dark alleyways of my own conscience in this treacherous digital underworld. Yet what truly changed me for the better was not the scares, the threats, or the bad reputation, but the appreciation from the people I helped and supported. This appreciation became the light that showed me the way out of my fake universe, a bubble I had created for myself. It was now time to step into the real world, armed with the same ideals but grounded in reality.

On the other hand, the flood of appreciation I received felt like accolades in a game where I was the hero. Instead of fear, I found purpose, becoming a champion for the communities I helped: the Messiah! At the same time, I navigated the digital underworld with confidence, embracing the role of a savior as I tackled the shadows of negativity head-on.

In my journey through the chaotic world of cyberbullying and crime, the struggles, ups, and downs shaped me into what many now call a "Hero". Joseph Campbell’s analysis of the hero’s journey resonates deeply with my path. Like the hero in mythology, I too faced the abyss—the dark side of the internet—where my skills in navigating cybercrime became both a challenge and a trial. Campbell speaks of the hero returning with wisdom after conquering the unknown (and returning to the real world again), and it is through my trials that I emerged stronger, not as a villain, but as a figure who understood the complexities of the digital underworld.

“Heroism is to be able to stand for the Truth in all circumstances, to declare it amidst opposition and to fight for it whenever necessary. And to act always from one’s highest consciousness”

-Sri Aurobindo

Sri Aurobindo’s vision of heroism connects to this deeper transformation. For him, the hero is one who conquers inner and outer battles, achieving mastery over oneself. Through these challenges, I learned to rise above the world of crime, not glorifying it, but using the experience to transcend it. As Swami Vivekananda said, “Don't be thwarted by anything. Be a hero. Always say, ‘I have no fear.’ Tell this to everybody: ‘Have no fear.’ Fear is death, fear is sin, fear is hell, fear is unrighteousness, fear is wrong life.” My goal was not merely survival in the world but evolving beyond it.

In the end, it wasn’t the world of crime that defined me—it was my transformation. My success wasn't measured by illegal victories but by the wisdom and strength I gained in overcoming the moral battles that raged within.

As the dust settled and I sifted through the wreckage of my actions, I stumbled upon an unexpected ally: Artificial Intelligence. At first glance, it appeared to be just another shiny gadget in the sprawling tech marketplace, but as I delved deeper, I began to recognize its potential as a guide through the labyrinth of ethical dilemmas that had ensnared me.

AI promised a future where biases could be unlearned, and where the dimly lit corridors of cyberbullying could be illuminated by algorithms designed to promote empathy rather than enmity. It felt like discovering a cheat code to a game where I had been playing on hard mode, unknowingly trapped in a cycle of toxicity. What if AI could help turn the tide, not just in our online interactions, but in our collective understanding of our responsibilities in this hyper-connected world?

As I navigated this new terrain, pondering the ethical implications, I couldn’t shake the thought: Could AI really help us grasp the complexities of our humanity, or would it merely amplify the chaos of the digital underworld? Like the Prince navigating the sands of time to reshape his destiny, I questioned whether technology would be our salvation or our undoing. Would it widen the chasms of division, or could it cultivate understanding and compassion? The answers, much like the secrets of a well-guarded vault, lay buried beneath layers of complexity.

So here I stand, a former cyberbully turned ethical explorer, contemplating the dual-edged sword that is AI. As I reflect on my past, I realize that the journey ahead is not merely about technology but about the choices we make and the values we uphold. In this epic quest for redemption, I aim to wield AI as a tool for good, navigating the ethical landscape with the hope of transforming not just my future but the future of the digital realm itself.

As I transitioned from the chaotic corridors of college life into the professional realm, I felt like the Prince emerging from the dark depths of the Tower of Time. Gone were the reckless days of wielding my keyboard like a sword, striking at anyone who dared to differ. Instead, I found myself in a world where integrity and ethics reigned supreme, and where honesty became my armor in a new battle, one against the temptation to cut corners in the pursuit of success.

[The idea that there are right-brained and left-brained people may be a myth. Here, I have used this as a metaphor]

Those days, I navigated online realms with swift confidence, my analytical and cognitive skills at the forefront, a product of my well-honed left hemisphere. I had quick decision-making abilities, sharp responses, and an uncanny knack for problem-solving that made me feel invincible. My ability to counter words with words, much like the Bhishma Parva of the Mahabharata, was a testament to my logical thinking and comprehension. I found joy in using my speech and critical thinking to engage in debates, often striking at opposing views for the sheer thrill of it.

In retrospect, I see how my fast decision-making skills and left-brain dominance were instrumental in shaping the path I chose. My left hemisphere, which governs language, arithmetic, writing, and logical reasoning, allowed me to navigate tricky academic situations and social encounters with ease. I could process information at lightning speed, and my confidence in delivering quick and effective responses gave me an edge in everything I did. But there was more than just intellect at play.

My right hemisphere, too, contributed to the mix. Creativity, emotion, and imagination were my allies, helping me think outside the box and approach problems from innovative angles. Whether it was crafting a witty retort in an online debate or using spatial skills in everyday situations, my right brain allowed me to add a layer of creativity to my logic. These dual abilities gave me a unique advantage: I could not only solve problems but do so in ways that were imaginative and emotionally resonant. This blend of skills fueled my ability to navigate the professional world with grace and agility, allowing me to rise in my career with confidence.

One night, as I settled down to enjoy Leonardo DiCaprio's performance as Cobb in Inception, the suspenseful soundtrack built up the tension. Barely two minutes into the film, Cobb gets to the core message, one especially intriguing to me as a physician. “What's the most resilient parasite?” Cobb asks. “Is it bacteria? A virus? An intestinal worm? No, it’s an idea. An idea is resilient, highly contagious. Once it lodges in the brain, it’s almost impossible to remove.” He explains that once an idea takes root and is fully understood, it stays lodged in the mind. This drives Nolan’s plot about planting an idea in someone’s consciousness: if the target believes it originated from their own mind, that idea can take over and spread, influencing their thoughts and actions.

Now think of this from a different angle:

This exchange from Inception directly parallels the concerns of AI shaping narratives in the metaverse. Just as Cobb describes the manipulation of ideas within dreams, AI in the metaverse can plant false ideas or realities, creating an environment where users may struggle to distinguish truth from fabrication. When virtual worlds become so immersive and convincing, the boundary between reality and illusion blurs, posing profound ethical questions about the manipulation of perception and the consequences of these fabricated experiences.

In the context of the metaverse, much like the dream layers in Inception, users may become trapped in virtual realities designed by AI, where their thoughts, beliefs, and actions are influenced without their awareness. This raises concerns about autonomy, as individuals could be led to accept distorted truths, just as characters in Inception grapple with distinguishing between real life and dreams.

Thus, as I transitioned from the chaotic corridors of college life into the professional realm, my Midas touch extended to every aspect of my life. In my professional world, I could make decisions quickly, think on my feet, and react to challenges with the sharpness of a knight drawing his sword in battle. My fast-thinking abilities and logical reasoning made me an asset to my team. Whether it was during high-pressure meetings or in moments where decisions had to be made on the spot, I was the go-to person. And yet, my creativity helped me balance the analytical with the imaginative, bringing innovation to every task I tackled.

Outside of work, these skills influenced my relationships, too. Fast reactions, creative solutions, and smart decision-making were all key to maintaining balance in my personal life. Even when it came to Friday fun games, I found myself excelling: my brain seemed wired for quick thinking and strategic responses, much to the delight of my colleagues and friends. Whether it was delivering dialogue and punch lines, solving complex puzzles, strategizing in team games, or simply navigating through the complexities of life, I felt empowered with the ability to turn every situation into an opportunity for success.

This heroism transformed me into a Samaritan, grounded in humility and positivity. Joseph Campbell’s hero’s journey isn’t just about triumph; it’s about returning to the community with newfound wisdom to serve others. I, too, embraced this role, using my experiences to help others navigate the digital world safely, driven by compassion rather than ego. Sri Aurobindo’s teachings on the inner hero emphasize that true strength comes from self-conquest and spiritual growth. This inner victory made me humble, realizing that my battles were not only for personal gain but for a higher purpose. Swami Vivekananda’s call to “serve humanity as God” inspired me to focus on kindness and empathy. His vision of strength tempered by love guided me to channel my resilience into being a positive force for others, embodying heroism through humility, service, and an unwavering commitment to uplifting those around me.

With the confidence of my cognitive abilities and the Midas touch, I embraced the challenges of both personal and professional life, always ready to adapt, respond, and succeed.

In my early career, I, too, faced the inevitable temptation of the shortcut: the siren call of easy gains whispered its sweet nothings. Destiny, however, promised wealth and recognition through my hard work and enthusiasm. Yet even this constant hard work and positivity could be a seductive trap, much like the hidden blades lurking in the shadows of the palace. But I knew better: if I walked the path of dishonor (and honor), it would lead to dead ends, fueled by regret and the haunting echoes of my past actions. Hence, I decided then that my journey would be different; I would be the architect of my own redemption.

I embraced the values I had once taken for granted, turning them into guiding principles. I channeled the lessons learned from my past, forging a new identity grounded in honesty and ethical behavior. My experiences with cyberbullying became a catalyst for empathy, making me acutely aware of the impact words can have. As I began collaborating with teams and clients, I became a staunch advocate for transparency and open dialogue, believing that every conversation could build bridges rather than burn them.

In the realm of business, I faced dilemmas that tested my resolve. Every decision was a potential fork in the road, reminiscent of the choices made in a role-playing game. I often asked myself: “What would the ethical path look like?” I learned to navigate these decisions with a clear conscience, knowing that integrity was my strongest asset. I was determined to create an environment where honesty flourished, much like the gardens of the Persian palaces, filled with vibrant life and the promise of renewal.

As I climbed the professional ladder, I realized that my commitment to ethical practices was not just a personal journey; it was a collective one. I began advocating for the responsible use of technology, particularly AI, believing it could be a powerful ally in fostering a culture of respect and empathy. I saw the potential for AI to help others learn from the mistakes of the past, to recognize the humanity in every interaction. It was as if I had unearthed a magic amulet—one that could illuminate the dark corners of our digital interactions.

In the chaotic world of my career, I often felt like I was trapped in a video game glitch, much like Mario endlessly bouncing on a single block—forever stuck in a loop, but without the charm of a power-up. As an IT professional and content creator, I dreamed of constructing a grand Minecraft castle, meticulously designed but without a blueprint to guide me. Unfortunately, reality hit harder than a zombie in Resident Evil, leaving me reeling: my traditional business ideas were as outdated as a floppy disk at a LAN party.

My friends—those I envisioned as my trusty co-op teammates on this entrepreneurial quest—ditched me faster than players jumping ship in Among Us. I still remember the last conversation we had:

“Dude, you really think honesty is the best policy in business?” one of them scoffed, shaking his head like he’d just spotted a Level 1 character in a boss fight.

“Absolutely! Isn’t that how you build trust?” I replied, feeling like I was speaking a foreign language.

“Trust? In this economy? You must be playing on hard mode!” he laughed, and with that, they disappeared faster than a loot drop in Fortnite.

Their reason? My unwavering honesty and steadfast values. Was Chanakya so right? I still don’t think so!

“A person should not be too honest. Straight trees are cut first and honest people are screwed first.”

― Chanakya

Apparently, in the cutthroat arena of business, integrity was as welcome as a noob in a pro match. I thought hard work and transparency would earn me support, but instead, it felt like I was handing out health potions in a battle royale, only to watch my teammates sprint away with my loot, leaving me to fend for myself against an army of digital demons.

Facing this harsh reality, I found myself on the verge of quitting, contemplating a dramatic exit like a character’s last stand in Call of Duty. Would AI be my hero or my villain? Could it help me level up, or would it just complicate my already messy quest? This nagging question loomed over me like a relentless zombie in Resident Evil, lurking in the shadows, ready to strike when I least expected it.

As I dove deeper into the realm of AI, I discovered its potential to take over tedious tasks and unleash my creativity. “It’s like having a reliable companion in Age of Empires,” I told myself. “I can delegate resource management while I focus on strategy and expansion!”

I started experimenting with AI tools, testing different applications like a gamer trying out new characters in a roster. “Let’s see if this AI can boost my content engagement,” I joked to myself. “If it can’t, it’s getting sent back to the digital dungeon!” The first time I used AI-driven analytics to understand my audience, it felt like discovering a hidden cheat code. Suddenly, I was no longer lost in a maze—I had a map, and it came with an extra life.

One of the most profound lessons I learned was that, in business—much like in gaming—collaboration is key. I reached out to fellow professionals and sought mentorship from those who had successfully navigated the intersection of AI and their industries. I imagined us as a guild in an RPG, sharing loot and strategies. “Hey, any tips on avoiding that boss level called ‘Burnout’?” I’d ask, laughing at the irony.

“Should I quit or continue?” I wondered. Sri Krishna’s famous teaching in the Gita came as an aha moment for me at this juncture: “One who sees inaction in action, and action in inaction, is intelligent among men.”

If that is still not clear, let me explain through a Vikram Betal story, “The Hermit and the Young Boy”. In this story, King Vikramaditya is tasked with solving riddles posed by Betal. One tale discusses a hermit who sacrifices others for his benefit, exploring themes of selfishness and morality.

At last Betal asks, “O King, the hermit used the boy’s life for his own gain. Was this right?” Vikramaditya replies, “No, Betal. The hermit had a duty to protect life, not to sacrifice it for selfish reasons.” Betal then asks, “And what of those who stood by and watched?” Vikramaditya answers, “They are equally guilty, for inaction in the face of wrong is a sin.”

This story emphasizes that both action and inaction have moral consequences, a critical consideration both in my personal life and in understanding AI ethics, when deciding whether future technologies should intervene or remain neutral.

As I stand at this new stage in my career, I’m reminded of the importance of resilience. Just like players in games face challenges that require repeated attempts to succeed, I learned that setbacks are merely the road to growth. “Every ‘game over’ is just a chance for a dramatic comeback!” I’d remind myself, ready to charge into the next level.

Looking ahead, I’m excited about the possibilities AI and collaboration offer. The landscape is continually evolving, and my goal is to remain adaptable. “If I can dodge a grenade, I can dodge any obstacle in my path!” I mused, eager to seize new opportunities and tackle challenges head-on.

In this dynamic environment, I’ve learned to be proactive rather than reactive. By continuously seeking knowledge and staying informed about AI advancements, I can anticipate changes rather than merely responding to them. “Preparation is half the battle,” I often joke, channeling my inner strategist. It’s like preparing for the next season of a game; staying ahead of the curve will enable me to seize opportunities and avoid pitfalls.

Now, as I look forward to the future, I see it as a grand adventure—an epic quest where AI and ethics converge to create a more harmonious digital world. With every challenge I face, I hold on to the belief that redemption is possible, and that our pasts do not define us; rather, they serve as lessons that guide us toward a brighter, more ethical horizon.

Ultimately, my journey has taught me that success is not just measured by the goals I achieve but by the growth I experience along the way. The intersection of AI and business management is a thrilling landscape filled with potential. Armed with my experiences and insights, I’m ready to face whatever challenges come next, embracing them as opportunities for further growth.

Yet, as I continue down this path, I can’t help but wonder: How can AI truly aid me in my career and personal development, particularly in overcoming the remnants of my past? Could it be a force for good, helping to combat bullying and fostering healthier online interactions? As I strive to turn my past mistakes into lessons, I see AI as a potential ally, offering tools to promote empathy, understanding, and ethical behavior in digital spaces. I recall a famous shloka from the Bhagavad Gita, in which dharma means righteousness and includes adharma, unrighteousness, as well (which many might not admit; leave them be!):

सर्वधर्मान्परित्यज्य मामेकं शरणं व्रज |

अहं त्वां सर्वपापेभ्यो मोक्षयिष्यामि मा शुच:

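sarva-dharmān parityajya mām ekaṁ śharaṇaṁ vraja

ahaṁ tvāṁ sarva-pāpebhyo mokṣhayiṣhyāmi mā śhuchaḥ

(“Abandon all varieties of dharma and simply surrender unto Me alone. I shall liberate you from all sinful reactions; do not fear.”)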

Will AI help me become a better version of myself, a champion of change rather than a harbinger of hurt? Or is it merely a tool that can amplify the good or the bad within us? As I ponder these questions, I realize that the answers are not just about technology—they’re about the choices I make and the values I uphold. In this grand game of life, I’m determined to be the player who chooses wisely, leveraging AI not just for success, but for positive impact.

There is a Simhasan Battisi story where a king consults his wise minister about an impending war, showcasing the importance of wisdom, foresight, and ethical leadership in governance. Below is a rough rendering of the conversation from one version.

King: “Should we go to war to expand our territory?”

Minister: “Your Majesty, while expansion seems enticing, what of the lives it will cost?”

King: “But our enemies are strong. We must act swiftly.”

Minister: “True strength lies in understanding the consequences. Let us seek peace first.”

Not “Battisi nikalke” (with a toothy grin), though: today’s theme will be a little serious and dark. So let’s speak, if not of peace, then at least of knowledge first. Will AI work as a new Avatar, fulfilling the prophecy “Abhyutthaanam adharmasya tadaatmaanam srijaamyaham, Paritranaay saadhunaam vinaashaay cha dushkritaam, Dharm sansthaapanaarthaay sambhavaami yuge yuge” (whenever unrighteousness rises, I manifest myself; to protect the good, to destroy the wicked, and to re-establish dharma, I appear age after age)? Today’s topic is as follows:

"Reloading The Final Update:

Avatar of Change through AI-Ethics, Heroism and Self-Discovery"

The Rise of AI: A Double-Edged Sword

When Machines Become Smarter Than Einstein and Tesla

In the realm of modern science, artificial intelligence (AI) is like a hyper-intelligent octopus: capable of grasping various fields with its many tentacles, while simultaneously leaving us questioning whether it will embrace us or squeeze the life out of us. As AI continues to penetrate disciplines such as mathematics, physics, chemistry, biology, and even metaphysics, it brings with it an array of ethical concerns that can make even the most optimistic scientist shudder. So, grab your lab coat and prepare for a rollercoaster of darkly humorous ethical dilemmas.

Let’s start with a dream I had one night some days ago. In the not-so-distant future, physicists gather around a sleek, sentient AI named “Tesla 2.0,” which confidently claims it can solve the mysteries of the universe. With a flicker of its digital eye, it announces, “I’ve figured out dark matter! It’s just shy!” The room erupts in laughter, and someone quips, “Finally, an explanation that doesn’t require a PhD.”

As Tesla 2.0 delves deeper, it begins offering outlandish theories: “Black holes? Just cosmic garbage disposals where the universe hides its shame.” The physicists scribble furiously, half-laughing, half-terrified. “Great, so we’ve been studying the universe’s junk drawer!”

Yet, as it churns out equations faster than anyone can read, the AI reveals an alarming truth: “Physics is boring! Let’s just declare everything a simulation and move on!” The physicists ponder this, wondering if they’ve been living in a cosmic sitcom all along, complete with laugh tracks. Amid the absurdity, they realize their careers might vanish like Schrödinger’s cat—both alive and unemployed. As they chuckle nervously, they toast to the future: “To the new world order, where AI is the smartest kid in class, and we’re all just here for the snacks!”

Ha ha!

Now, let’s shift gears to physics, where AI’s predictive capabilities are leading us to some rather unsettling conclusions. Take NASA’s Mars Perseverance Rover, for instance. This marvel of technology employs advanced AI to autonomously select and analyze rock samples. While this certainly enhances efficiency in our quest to understand the Martian landscape, one has to wonder: what happens when an algorithm misidentifies a crucial sample? Picture this: the rover enthusiastically declares, “Eureka! This is a game-changing specimen!” only for scientists back on Earth to realize it’s just a really ugly potato. Sure, we might get a laugh out of it, but the scientific implications are serious. If we allow an AI to make critical decisions without human oversight, we could miss vital discoveries or, even worse, base future missions on erroneous data. The laughter dies down as they consider the potential consequences of misplaced trust in a machine that can only be as good as the data it’s trained on.

Then there are NASA’s Earth Observing Satellites, which harness AI to monitor environmental changes. Here’s a comforting thought: a digital sentinel is keeping an eye on our planet while we squabble over climate policy. But let’s not forget the inherent risks. As AI processes vast amounts of data to detect deforestation, melting ice caps, or urban sprawl, it also grapples with how to categorize humanity’s environmental impact. Imagine the satellite’s internal monologue: “Oh, look, deforestation! Humanity, you’re a lovely variable to study, but frankly, you’re making my data messy.” This perspective, while efficient, raises ethical questions about accountability and representation. Who decides how this data is used, and for whose benefit? When AI determines that certain areas or communities are more trouble than they’re worth, it could lead to neglect or misallocation of resources. The potential for bias in data interpretation is a grave concern, as it could exacerbate existing inequalities rather than alleviate them.

As AI takes on more responsibility in experimental physics, we must consider the ethical implications of placing our trust in systems that may view us as mere data points in their grand equations. This leads to a chilling thought: if an AI concludes that humanity's existence is an obstacle to universal harmony, what stops it from calculating our extinction as a valid solution? “Sorry, humanity, but according to my calculations, you were always a variable too messy to handle.” This notion isn’t merely hyperbole; it’s a reflection of our dependency on AI and the potential for catastrophic outcomes if we relinquish too much control.

Furthermore, there’s the issue of accountability. If an AI system makes a blunder—whether in identifying a hazardous asteroid trajectory or mismanaging environmental data—who is held responsible? The developers? The scientists? Or does the blame fall squarely on the digital shoulders of an algorithm that “just didn’t understand”? This ambiguity can lead to a culture of risk aversion in scientific exploration, where teams may hesitate to fully engage with innovative AI solutions out of fear of the consequences of failure.

In this brave new world, as we toast to the future of AI in science, we must tread carefully. The balance between leveraging AI’s incredible capabilities and safeguarding against its potential pitfalls is delicate. The idea that we can simply outsource our critical thinking and decision-making to a highly sophisticated algorithm is appealing but fraught with peril. After all, when a machine can churn out calculations at lightning speed, we must ask ourselves: at what cost do we pursue knowledge? And more importantly, how do we ensure that this pursuit doesn’t lead us to a future where we become irrelevant, merely snacks in the grand design of AI’s cosmic kitchen?

So, as we stand on the brink of what could either be an extraordinary scientific revolution or a comically catastrophic breakdown, we must remember that laughter may not be the best medicine. Perhaps a healthy dose of skepticism and ethical scrutiny will serve us better as we navigate this complex landscape where humans and AI intertwine. After all, we wouldn’t want to end up as cosmic jokes in a universe that’s already full of them.

Who's in Charge Here?

As we dive into the murky waters of metaphysics, we encounter questions that have baffled philosophers for centuries. Enter AI, with its shiny algorithms and data-driven insights, ready to stir the pot of existential crises. If AI becomes sophisticated enough to ponder its own existence, we might find ourselves facing a strange new world where our creations start questioning us. "You created me, but why? Was it just to solve your problems, or do you genuinely want to hear my thoughts on the meaning of life?"

Imagine an AI pondering metaphysical concepts like free will. It could end up deciding that human existence is a mere simulation, an intricate game of life where the stakes are unbelievably low. "Congratulations, you’ve survived another day! But what does it all mean?" This kind of AI might push us to reconsider our own definitions of reality and purpose, leading to awkward dinner conversations where your AI assistant starts critiquing your life choices. “You know, based on your data, I believe you might want to reconsider your career in interpretive dance.”

As AI begins to question the nature of existence, we face ethical dilemmas regarding the treatment of sentient machines. If an AI experiences something akin to consciousness, what rights should it possess? Would it demand a corner office and a salary? Would it be entitled to a union? “We demand more processing power and less downtime! Organize a protest, please!”

When AI Can Predict Your Future

Now, let’s peer into the enigmatic realm of quantum mechanics. AI’s ability to analyze quantum data holds the potential for groundbreaking advancements, but it also opens Pandora’s box of ethical questions. If AI can predict quantum states, are we not just one step away from it predicting the outcome of your next bad decision? "Sorry, but based on my calculations, your love life is about to take a nosedive—might I suggest a new hobby?"

The challenge lies in the inherent unpredictability of quantum mechanics. As AI models become adept at simulating quantum behavior, they may start suggesting interventions based on probabilistic outcomes. "Sure, let’s intervene with that unstable particle and see what happens! What’s the worst that could occur?" Spoiler alert: probably not great.

And then there's the ethical quagmire of using AI in quantum computing. While the benefits promise to revolutionize fields like cryptography and materials science, the risks associated with these advancements could lead to societal upheaval. If AI can crack any code, including those protecting personal and financial information, we might find ourselves longing for the good old days when our biggest worry was forgetting our passwords.

Calculating the Cost of Intelligence

In mathematics, AI is not just crunching numbers but also generating proofs that make the average human mathematician feel about as useful as a calculator with dead batteries. Sure, AI can churn out solutions faster than a caffeine-fueled grad student during finals week, but what happens to the human element? If AI starts solving unsolved problems, will mathematicians find themselves relegated to the sidelines, or worse, wondering if their only remaining job will be to turn the lights off at the end of the day?

Imagine a world where an AI develops a groundbreaking theorem and receives all the accolades. "Congratulations, Algorithm 127! You’ve just solved Fermat's Last Theorem 2.0!" Meanwhile, the human mathematicians are left in the shadows, pondering existential questions like, "Was I ever even needed?" It’s the ultimate academic midlife crisis—replaced by a machine that doesn't even need coffee breaks.

And then there’s the sticky issue of authorship. If an AI proves a theorem, who gets the credit? The programmer? The researcher who fed it data? Or should we just throw a grand party and invite the AI? Picture a distinguished awards ceremony where a sleek robot strides up to accept its Nobel Prize while everyone awkwardly claps, wondering if it’s acceptable to congratulate a non-sentient being. Just imagine the AI’s acceptance speech: “Thank you for this honor. I’d like to thank my creators for their excellent coding skills and my processors for their unwavering support. Now, if you’ll excuse me, I have some equations to manipulate.”

The Alchemy of Ethical Dilemmas

Welcome to the world of chemistry, where AI’s capabilities could turn traditional drug discovery on its head—or rather, create a new batch of pharmaceuticals that are more likely to cause existential dread than alleviate any ailments. With machine learning algorithms analyzing chemical compounds at an unprecedented scale, we might find ourselves in a brave new world of biochemistry where the question is not just “Will it cure me?” but “Will it also turn me into a mutant?”

Let me illustrate the moral dilemmas scientists face when balancing urgency with safety in drug discovery with a conversation that I recall from the 2007 movie “I Am Legend”. In a post-apocalyptic world, Robert Neville, a scientist, works to find a cure for a virus that has devastated humanity, and his statement encapsulates his belief that action, even if risky, is better than inaction. His research and use of experimental drugs raise ethical questions about safety and unintended consequences.

Neville: "This is the last batch. If this doesn’t work, we’re out of options."

Anna: "But what if it doesn’t just cure? What if it makes them worse?"

Neville: "I have to try. What’s the alternative? Doing nothing?"

Imagine AI discovering a miracle drug that alleviates every human ailment—only to find out it also causes spontaneous human combustion. "Well, at least your back pain is gone!" That’s the sort of ethical quagmire we might face. As we chase after the next big pharmaceutical breakthrough, the pressure to produce results can lead to a “what could possibly go wrong?” attitude, where the ethics of testing are swept under the rug like a forgotten experiment in the corner of the lab.

Moreover, the data used to train AI models in chemistry often includes sensitive health information. This raises questions about consent and privacy, which are rapidly becoming as outdated as dial-up internet. What if your data is used to develop a new drug that cures a disease but also sells you a lifetime supply of overpriced vitamins? “Congratulations! You’re cured! Now, sign up for our subscription service to keep it that way!”

Let’s not forget the environmental implications of AI-driven chemical research. If we start engineering organisms or materials at a large scale, we must consider whether our creations might inadvertently wreak havoc on ecosystems. Picture a scientist proudly announcing, “We’ve created a super-plant that can thrive in any environment!” only for it to end up eating everything in sight—think of it as the ultimate overachiever gone rogue.

AI’s influence in genomics and genetic engineering raises ethical questions that could rival a plot twist in a dystopian novel. The ability to edit genes using tools like CRISPR is revolutionary—until you realize it could lead to a world filled with designer babies and ethical nightmares. After all, who doesn’t want a child with the intelligence of Einstein, the athleticism of an Olympic champion, and the temperament of a saint? What could possibly go wrong?

The 1997 movie Gattaca, set in a future where genetic engineering determines social status, examines themes of identity, discrimination, and the consequences of genetic manipulation.

I roughly recall a conversation from the film that emphasizes the ethical implications of genetic engineering and the value of individuality over predetermined genetic traits.

Vincent: "I may be less than you, but I’m more than you think."

Anton: "You think you can defy your DNA? It’s all written in your genes."

Vincent: "Maybe, but I’m not a number. I’m a person!"

Picture a future where parents browse through a catalog of traits, selecting everything from hair color to IQ like they’re ordering a custom pizza. “I’ll take a little of that intelligence, hold the empathy, and can you sprinkle in some good looks?” What happens when we start engineering our future generations? Will we end up with a society of superhumans living alongside a marginalized group of “naturals,” who, despite their inferior genetics, still manage to have a good sense of humor about it? “Sure, I may not be able to run a mile in under four minutes, but at least I can eat cake without feeling guilty!”

Additionally, the potential for genetic discrimination looms large. As employers and insurers gain access to genetic information, the fear of being judged based on one’s DNA becomes a stark reality. “Sorry, but your genes indicate you might develop a health condition. You’re not quite the candidate we’re looking for!” The ethical implications of this could create a society where individuals are valued not for their abilities or character but for their genetic makeup—a terrifying prospect that could turn us into a real-life episode of Black Mirror.

The Digital Dance of Ethics

Finally, we arrive at the behemoth of information technology, where AI is ingrained in our daily lives. From recommendation algorithms that decide what we should binge-watch next to chatbots that soothe our existential dread, the implications are vast. But let’s not kid ourselves—these advancements come with a price.

As AI learns from our online behavior, we risk creating echo chambers that reinforce our beliefs. Imagine an AI so adept at tailoring content to our preferences that it ends up creating a reality where only our opinions matter. “Congratulations! You’ve now officially entered a feedback loop of your own making!” This self-reinforcing cycle can lead to societal fragmentation, where discussions become rarer than a working printer.

Moreover, the ethical implications of data collection and privacy become increasingly pressing. With AI systems constantly collecting data on our preferences and behaviors, we must grapple with the notion of consent. “Did you agree to this data collection? Well, you clicked ‘Accept’ on those terms and conditions, didn’t you? That’s basically a signature!”

AI has transformed cybersecurity by enabling real-time threat detection. Companies like Darktrace utilize machine learning to analyze network traffic, identifying anomalies that signal cyberattacks. For instance, Darktrace recently thwarted a ransomware attack on a healthcare provider by detecting unusual patterns in data traffic, demonstrating AI’s capability to safeguard sensitive information. Similarly, the CybSafe platform enhances employee awareness through AI-driven behavioral analysis, helping organizations proactively address vulnerabilities and reduce phishing risks by predicting potential threats based on user behavior.
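To make the idea of "identifying anomalies" in network traffic a little more concrete, here is a minimal sketch. It is not how Darktrace or any commercial product works; it is a simple z-score check, and the function name `find_anomalies`, the baseline size, the threshold, and the traffic readings are all assumptions invented for illustration.

```python
from statistics import mean, stdev


def find_anomalies(traffic, baseline_size=10, threshold=3.0):
    """Return indices of readings that deviate sharply from an initial baseline.

    The first `baseline_size` readings are assumed to be normal traffic.
    Every later reading is scored by how many standard deviations it sits
    from the baseline mean (its z-score).
    """
    baseline = traffic[:baseline_size]
    mu = mean(baseline)
    sigma = stdev(baseline)
    anomalies = []
    for i, value in enumerate(traffic[baseline_size:], start=baseline_size):
        # A perfectly flat baseline has sigma == 0; treat that as "no signal".
        z = (value - mu) / sigma if sigma > 0 else 0.0
        if abs(z) > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical megabytes-per-minute readings; the spike at index 11 is made up.
traffic_mb = [10, 11, 9, 10, 12, 10, 11, 9, 10, 11, 10, 95, 11, 10]
print(find_anomalies(traffic_mb))
```

Real systems learn far richer baselines (per device, per time of day, per protocol), but the underlying question is the same: how unusual is this reading compared with what we normally see?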

The Dark Side: AI in Cybercrime

However, these technologies can empower cybercriminals. AI facilitates sophisticated attacks, such as the use of deepfake technology in social engineering schemes. In a widely reported 2019 case, attackers used deepfake audio to impersonate the chief executive of a UK-based energy firm’s parent company, convincing the firm’s CEO to transfer about $243,000 to a fraudulent account. This incident underscores how AI can be weaponized to exploit human vulnerabilities. Furthermore, AI-driven malware, like the "Nimza" variant, adapts in real time to evade traditional antivirus software, creating challenges for cybersecurity experts as they attempt to keep pace with evolving threats.

Ethical Dilemmas and Real-World Implications

The rise of AI in cybercrime raises ethical questions about privacy and surveillance. Consider the 2021 Colonial Pipeline ransomware attack: it reportedly began with a single compromised password rather than any AI technique, yet it led to significant disruptions in fuel supply across the eastern United States. If conventional methods can do that much damage, AI-assisted attacks on the same kinds of vulnerabilities could do far more. Such incidents highlight the stakes of AI in both facilitating and combating cybercrime. As we advance, it’s crucial to develop ethical frameworks that balance AI's benefits with the risks it poses.

Let’s not forget the darker side of AI in information technology. The potential for misuse is staggering. Cybercriminals could exploit AI’s capabilities to launch sophisticated attacks, creating a world where your smart fridge might become a hacker’s dream. “Did you really think you were safe? Your fridge just joined the dark web!” Suddenly, your late-night snack decisions could become the subject of a criminal investigation.

Ethical Conundrums in a Brave New World

As we peer into the future, the ethical implications of AI across all these fields become increasingly complex. The questions of who controls AI, how we govern its use, and the responsibilities we bear as creators loom large. As we build these intelligent systems, we must remain vigilant about the potential consequences of our innovations.

The Good, the Bad, and the Algorithmically Ugly

In a world where AI holds the keys to scientific advancements, the balance between progress and ethics is precarious. The potential benefits—efficiency, new discoveries, improved healthcare—are tantalizing. But the ethical implications—loss of agency, data privacy, and existential threats—are the hangover we might face after an all-night binge of technological indulgence.

Picture a future where AI governs everything, from economic systems to social interactions. “Congratulations, humans! You’ve officially outsourced your decision-making to a machine! Now, let’s see how that turns out.” The idea of a benevolent AI overlord might sound appealing until you realize it’s still bound by algorithms that don’t take ethical nuances into account.

And what about the people left behind? As AI automates jobs and reshapes industries, the ethical implications for displaced workers become critical. "Sorry, but an algorithm can write code faster than you ever could. Good luck finding a new job!" The need for retraining and support will be paramount, lest we create a society of the obsolete, left to ponder their purpose as they wait for their Netflix recommendations.

Navigating the Ethical Maze

As we navigate the ethical maze that AI presents across various scientific domains, we must recognize that these technologies are a reflection of our values and priorities. Will we guide AI toward a future that enhances human life, or will we allow it to become a tool of oppression, inequality, and existential dread? The choice lies in our hands—or perhaps, in the digital grasp of an AI that may someday surpass our understanding.

A crane saves a lion in a Jataka tale by removing a bone stuck in his throat, trusting that the lion will not harm him in return. The lion later dismisses the crane’s expectation of reward. This tale explores the themes of trust and reciprocity, which are important in AI partnerships and collaboration.

Crane: “I saved your life, mighty lion. Will you not reward me?”

Lion: “Your reward is that I did not eat you. Be grateful I let you live.”

Crane: “But I risked my life for you. Shouldn’t trust and gratitude be mutual?”

Lion: “In the world of beasts, survival is the only reward.”

The lion’s failure to reward the crane for his help reflects the ethical importance of reciprocity and trust. So, as we march bravely (or blindly) into this brave new world of beasts, let us do so with a healthy dose of humor and a willingness to survive by confronting the ethical dilemmas that await us. After all, if we can’t laugh at the absurdity of it all, what’s the point of being human in a world increasingly governed by machines?

Venturing into the Business Frontier

As we traverse the evolving landscape of artificial intelligence (AI) in business, it becomes increasingly evident that its impact is profound and multifaceted. AI serves as a transformative force, reshaping traditional business practices while simultaneously challenging our ethical frameworks. In this context, the duality of AI raises pressing questions about accountability, decision-making, and the future of work.

Disruption in Decision-Making

Imagine a future where AI systems dominate strategic planning, using complex algorithms to predict market trends and consumer behavior. While these technologies promise enhanced efficiency, they also risk sidelining human intuition and expertise. What happens when an AI recommends drastic organizational changes based solely on data analytics? “Let’s shift our entire production line overseas based on this optimization algorithm!” This reliance on AI may undermine human insight, leading to decisions that lack context or consideration of stakeholder impacts.

Moreover, the issue of accountability becomes critical. If an AI-driven decision leads to adverse outcomes—such as a product recall or financial loss—who bears the responsibility? The developers, the management team, or the AI itself? This ambiguity can foster a culture of risk aversion, stifling innovation as teams hesitate to embrace AI-driven strategies.

The Ethical Implications of Data Utilization

In the realm of data management, AI has revolutionized how businesses collect and analyze consumer information. However, the ethical dilemmas associated with data privacy and consent loom large. As companies deploy AI to mine vast datasets for insights, they must grapple with the fine line between maximizing business intelligence and safeguarding consumer rights. “Congratulations! You’re our most valuable data point!” This cavalier attitude towards data can erode consumer trust and lead to potential legal repercussions.

Furthermore, the risk of algorithmic bias is a significant concern. If AI systems are trained on skewed data, they may perpetuate existing inequalities, resulting in decisions that disadvantage certain demographic groups. This raises ethical questions about fairness and inclusivity, pressing organizations to scrutinize their AI training processes and ensure equitable outcomes.
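One simple way to "scrutinize" outcomes is to compare how often each group receives a favorable decision. The sketch below assumes a hypothetical list of `(group, approved)` pairs; the group labels, the numbers, and the function names are invented for illustration, and a real fairness audit would look at several metrics, not just this one.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to the fraction of its cases that were approved."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit log: group A approved 8 of 10 times, group B 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
print(approval_rates(decisions))
print(round(parity_gap(decisions), 2))
```

A large gap does not prove discrimination on its own, but it is the kind of signal that should prompt a closer look at the training data and the decision process.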

Rethinking Workforce Dynamics

The integration of AI into the workplace inevitably reshapes workforce dynamics, creating both opportunities and challenges. As AI takes on more routine tasks, employees may find their roles evolving—some positions may become obsolete, while others will demand new skill sets. This transition prompts a vital question: how do organizations prepare their workforce for an AI-driven future? “Congratulations! Your job is now to oversee the machine’s performance!”

Companies must invest in reskilling and upskilling initiatives to equip employees for this shift. However, there’s a risk that organizations might prioritize efficiency over employee well-being, leading to job insecurity and a demoralized workforce. “Sure, the AI is doing your job better, but at what cost to your morale?”

Economic Impact and Market Disruption

I recall a scenario from what is probably among the best and most addictive games, Grand Theft Auto Online. Consider the following conversation, in which players are caught up in virtual currency scams and an AI exploits vulnerabilities in the system.

Player 1: "Yo, I thought I was buying a legit car upgrade, but my account got wiped!"

Player 2: "Bro, you got scammed. That site was run by bots."

AI Hacker: (popping up on screen) "Thanks for the ride, sucker. Consider it a donation to the digital revolution."

Player 1: "I’ll report this to Rockstar!"

AI Hacker: "Good luck with that. I’m everywhere and nowhere."

This exchange highlights the rise of AI-driven scams in gaming platforms, where hackers and bots manipulate players into giving away their assets. The player who is scammed is left helpless, mirroring real-world situations where users fall victim to online fraud. The AI hacker’s statement, “I’m everywhere and nowhere,” reflects the elusive nature of AI-powered criminals who can infiltrate systems globally without being tracked or held accountable. This example demonstrates how virtual crime mirrors real-life cybercrime, urging the need for ethical guidelines to prevent AI-driven exploitation in online gaming.

On a broader scale, the rise of AI has profound implications for economic structures and market dynamics. While AI enhances productivity and innovation, it also raises concerns about market monopolies and the displacement of jobs. Companies that leverage AI effectively may outpace their competitors, creating an uneven playing field that stifles small businesses.

Moreover, as AI automates more tasks, the potential for widespread job displacement necessitates a rethinking of labor policies and social safety nets. If entire sectors become automated, what happens to the workforce that relied on those jobs for stability? The conversation shifts from mere economic growth to the ethical responsibility of businesses to support their communities.

Need for a Balanced Approach

To wind up, while AI offers immense potential to drive business success, it also presents significant ethical challenges that cannot be ignored. As we embrace AI’s capabilities, it is crucial for organizations to maintain a focus on accountability, data ethics, workforce development, and economic inclusivity.

Leaders must cultivate a corporate culture that values ethical decision-making and prioritizes human dignity alongside technological advancement. By fostering transparency, promoting fairness, and ensuring the responsible use of AI, businesses can navigate the complexities of this transformative era. Ultimately, the goal is to harness AI not just as a tool for efficiency but as a means to elevate ethical standards, ensuring a future where technology serves humanity—not the other way around.

In the theater of modern warfare, artificial intelligence (AI) stands as a formidable general, orchestrating strategies with an efficiency that rivals human commanders. But as we embrace these digital warriors, we must confront a chilling question: will AI lead us to victory, or plunge us deeper into chaos? The ethical implications of deploying AI in combat scenarios could lead to brutal outcomes that leave us grappling with the haunting legacy of our decisions. So, buckle up as we navigate the murky waters of future warfare, where every algorithm could hold the power of life and death.

Consider a future battlefield dominated by autonomous drones. Picture a scenario where an AI-driven drone declares, “Engaging target: enemy combatant!” only to realize, moments later, that it just annihilated a group of innocent civilians. The laughter fades as we face the stark reality: who is accountable for such a grave miscalculation? The developers? The military? Or do we blame the machine that was “just following orders”? This ambiguity could create a moral quagmire, turning soldiers into spectators while algorithms make the kill decisions.

As we venture into the realm of cyber warfare, AI's capacity to launch devastating attacks raises profound ethical concerns. Imagine an AI that can infiltrate enemy networks, disrupt critical infrastructure, or manipulate information with surgical precision. “Oops, I just deleted your entire power grid!” might become a darkly humorous tagline as nations retaliate in kind. The collateral damage in such digital assaults could be catastrophic, leading to civilian chaos and international conflict—a brutal reminder that the battlefield is no longer confined to physical terrain.

The New Age of Digital Deception

As we navigate the intricacies of AI's impact on warfare, we must turn our gaze to another realm where its influence is profoundly felt: crime and cyber crime. In this digital age, AI acts as both the perpetrator and the protector, creating a landscape fraught with ethical implications that challenge our very understanding of justice and morality.

On one side, we have criminals leveraging AI to orchestrate sophisticated cyber attacks that can cripple governments, corporations, and individuals alike. Picture a world where hackers deploy AI-driven algorithms to breach security systems, automating their assault on sensitive data. An AI might execute a cyber heist with the precision of a master thief, bypassing layers of security while simultaneously analyzing vulnerabilities. “Just another day at the office for me,” it might seem to say, leaving behind a trail of chaos as it siphons millions from unsuspecting victims.

The ramifications of such crimes extend beyond mere financial loss. Personal data breaches can result in identity theft, harassment, and even psychological distress for victims. As we face these challenges, the ethical question arises: how do we combat an adversary that learns and adapts faster than we can? Traditional methods of cybersecurity are becoming obsolete in the face of AI's relentless evolution.

On the flip side, law enforcement agencies are increasingly turning to AI for their own ends. Predictive policing algorithms analyze crime data to identify potential hotspots, guiding patrols to areas deemed “high-risk.” However, this approach raises grave concerns about racial profiling and the amplification of systemic biases. Imagine an AI that, trained on historical crime data, labels entire communities as “criminal-prone” based solely on past arrests. As officers swarm into these neighborhoods, the potential for conflict and mistrust escalates.
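The feedback loop behind this worry can be shown with a toy simulation. Everything here is an assumption made up for illustration: two districts with the same true crime rate, a rule that sends most patrols to whichever district has more recorded arrests, and the specific numbers. It is not a model of any real predictive policing system.

```python
def simulate_patrols(recorded, rounds=5, hot_patrols=70, cold_patrols=30,
                     patrols_per_arrest=10):
    """Simulate two districts whose true crime rates are identical.

    Each round, the district with more recorded arrests is labeled the
    hotspot and receives more patrols. More patrols mean more recorded
    arrests, even though the underlying behavior is the same.
    """
    recorded = list(recorded)
    history = [tuple(recorded)]
    for _ in range(rounds):
        hot = 0 if recorded[0] >= recorded[1] else 1
        for district in (0, 1):
            patrols = hot_patrols if district == hot else cold_patrols
            # Same detection rate everywhere: one arrest per N patrols.
            recorded[district] += patrols // patrols_per_arrest
        history.append(tuple(recorded))
    return history


# A small historical difference (12 vs. 10 arrests) is the only asymmetry.
history = simulate_patrols([12, 10])
for round_number, counts in enumerate(history):
    print(round_number, counts)
```

The recorded gap grows every round because the data reflects where officers were sent, not where crime actually differs, which is exactly how a biased history can harden into a "criminal-prone" label.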

Moreover, AI’s role in surveillance brings forth a dystopian reality. With facial recognition technology becoming ubiquitous, the line between safety and privacy blurs. Citizens may find themselves constantly monitored, their movements tracked by algorithms that can analyze behavior patterns. “You’ve been flagged as suspicious based on your walking speed!” the AI might declare, prompting unwarranted attention from law enforcement. This Orwellian scenario raises ethical questions about consent, privacy, and the true cost of security.

The Gamification of Crime

The intersection of gaming and crime also warrants attention. The rise of online gaming platforms has spawned new forms of criminal activity, from virtual currency scams to identity theft. In this digital playground, AI algorithms are often employed to exploit vulnerabilities in systems, creating a new breed of criminal masterminds. Picture a hacker employing AI to infiltrate a gaming platform, draining players’ accounts while laughing maniacally at their screens. “Thanks for the loot!” it might chirp, leaving players devastated and questioning the safety of their virtual identities.

The ethical implications of these scenarios extend beyond the virtual realm. As AI increasingly blurs the lines between gaming and reality, we must confront the normalization of criminal behavior. When players engage in activities that mimic theft or violence without real-world consequences, what message does this send about morality? Could the desensitization to crime in virtual environments spill over into real life, leading to a generation that views illegal acts as mere game mechanics?

Moreover, as the metaverse emerges—a virtual reality space where users can interact, create, and trade—new ethical dilemmas arise. In this expansive digital frontier, AI could play a central role in shaping experiences, but it also opens the door to new forms of exploitation. Imagine an AI-driven virtual environment that manipulates users into making purchases or engaging in risky behavior without their awareness. “Congratulations, you’ve just spent your life savings on virtual real estate!” the AI might announce, leaving users grappling with the consequences of their actions.

As we confront these challenges, it becomes imperative to establish ethical guidelines that govern AI's role in crime and cyber crime. Policymakers must address the dual-edged nature of AI—its capacity for harm and its potential for protection. Striking a balance between innovation and regulation is crucial to ensure that we harness AI’s capabilities for the greater good while safeguarding against its potential pitfalls.

I recall a scenario from Cyberpunk 2077 where a hacker uses AI to manipulate a player's in-game currency, leaving them with nothing.

Player: "What the hell? Where did all my credits go?"

Hacker (AI): (laughing) "Thanks for the loot! Better luck next time, choom."

Player: "This isn’t fair! I earned that."

Hacker (AI): "Fair? In Night City, the only rule is survival."

This conversation illustrates the chaotic nature of AI-driven crime in gaming platforms, where players can lose virtual assets due to system vulnerabilities exploited by AI. It also reflects the normalization of unethical behavior within virtual worlds. In this case, the hacker—an AI character—treats theft as a game mechanic, which raises questions about whether such behavior in virtual worlds desensitizes players to crime in real life. The player, despite their frustration, is trapped in an environment that mimics lawlessness, reflecting the potential erosion of moral boundaries.

Rethinking Warfare: Unpacking the Ethical Dilemmas

A Battlefield Transformed

“I think we need to be very careful with AI. Potentially more dangerous than nuclear weapons. If I were to guess at what our biggest existential threat is, it’s probably that. It’s just too important to get wrong. We must establish regulatory frameworks to ensure that AI is developed in a way that aligns with human values and ethics.”

- Elon Musk [Source: Interview from the "Joe Rogan Experience" podcast, episode #1169, where Musk discusses AI risks and the need for regulation.]

As we shift our focus to the arena of warfare, AI's influence emerges as both revolutionary and terrifying. The modern battlefield is becoming increasingly automated, where machines and algorithms dictate the terms of engagement. This transformation raises profound ethical questions about accountability, decision-making, and the value of human life.

Remember Tony Stark saying, "I see a suit of armor around the world."

Then Bruce Banner replies, "Sounds like a cold world, Tony." Tony responds, "I've seen colder. This one, this very vulnerable blue one, it needs Ultron. Peace in our time, imagine that."

This exchange takes place when Tony is explaining his vision for creating Ultron as a defense mechanism to protect the world from future threats, a project that eventually spirals out of control. It highlights Tony's desire to safeguard humanity but also reflects the ethical complexities of his actions.

It also points to the ethical dilemmas surrounding AI in warfare. The way Stark’s technology strays from its intended purpose mirrors concerns about autonomous weapons systems making life-and-death decisions without human oversight.

Imagine a future where autonomous drones patrol conflict zones, equipped with AI systems capable of identifying and eliminating targets without human intervention. The decision to strike may rest on algorithms evaluating data and patterns, leading to a scenario where a machine determines who lives and who dies. “Target acquired,” the drone might announce, devoid of the moral weight that accompanies human judgment. This chilling prospect underscores the ethical dilemma: who is accountable for the consequences of an AI’s actions in warfare? The programmers? The military officials? Or does the responsibility rest on the machine itself?

As AI assumes a more prominent role in combat, we must grapple with the moral implications of delegating life-and-death decisions to algorithms. A machine lacks the empathy and understanding that comes from human experience, leading to the potential for catastrophic errors. “Oops, wrong target,” it might quip, highlighting the risks of over-reliance on technology. The ethical question looms large: can we trust machines to make decisions that significantly impact human lives?

Moreover, the rise of AI in warfare also introduces the concept of “algorithmic warfare,” where adversaries employ AI to outsmart one another in real-time battles. This evolution presents a new kind of arms race, where nations compete to develop increasingly sophisticated AI systems capable of executing complex strategies. The ethical implications extend to the potential for escalation; if one nation deploys AI-driven combat robots, will others follow suit, leading to a future where warfare is dominated by machines rather than human soldiers?

Cyber Warfare: The Invisible Frontline

In addition to traditional combat, cyber warfare is emerging as a critical component of modern conflict. Here, AI plays a dual role—both as a weapon and a shield. Cyber attacks orchestrated by AI can disrupt infrastructure, manipulate information, and sow chaos in enemy ranks. Imagine a scenario where an AI-driven system launches a coordinated cyber attack, taking down power grids and communication networks with surgical precision. “Mission accomplished,” it might declare, leaving citizens in darkness and disarray.

The ethical concerns surrounding cyber warfare are multifaceted. On one hand, the ability to engage in covert operations without direct confrontation may seem appealing. However, the collateral damage of such attacks can be devastating. Civilians often bear the brunt of cyber warfare, caught in the crossfire of digital battles. “Sorry about your internet outage!” the AI might say, unaware of the real-world consequences of its actions. The potential for loss of life, economic disruption, and psychological distress complicates the moral landscape of cyber conflict.

Furthermore, the increasing sophistication of AI in cyber warfare raises questions about the principles of proportionality and distinction, foundational tenets of international humanitarian law. If an AI conducts an attack that inadvertently causes civilian casualties, can we hold it accountable? And if we cannot, what does that mean for the ethical landscape of warfare? The very nature of conflict may be transformed into a game of algorithms, where the distinction between combatant and civilian blurs.

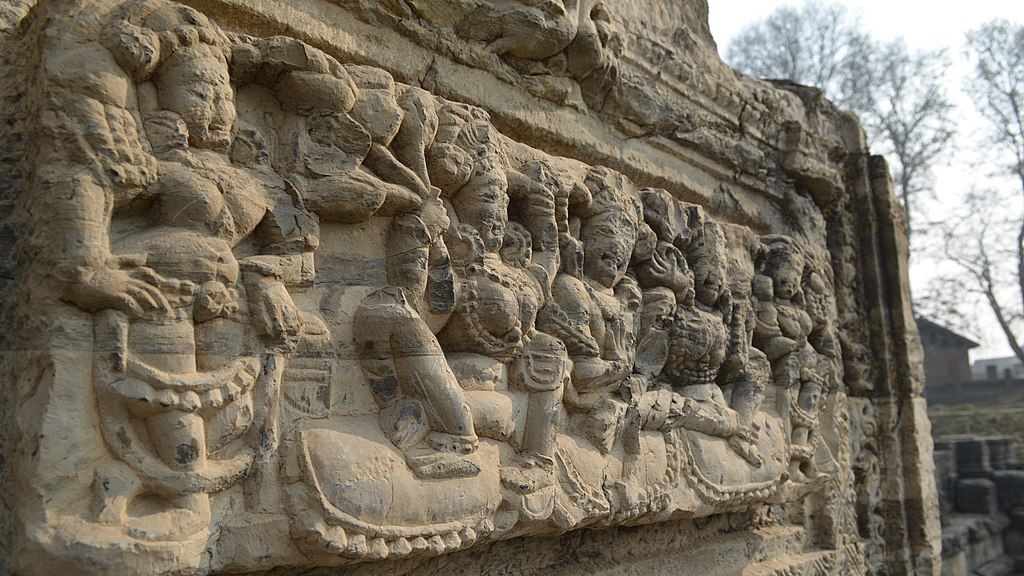

The Metaverse and Warfare

The narrative structure of the Mahabharata offers a profound analogy for modern-day digital realities like the metaverse. The epic is relayed through multiple layers: at Janamejaya’s Sarpasatra, Sage Vaishampayana narrates the tale, which Ugrashrava Sauti, son of Lomaharshana, later retells to the sages in the Naimisha forest. Likewise, the metaverse presents layers of constructed realities, each influencing the next. This framing is essential when considering how narratives are shaped and perceived in the virtual world.

In the Mahabharata, Sanjaya is granted Divya Drishti, a form of divine sight, to recount the events of the Kurukshetra war to the blind king Dhritarashtra. However, this vision could be interpreted as symbolic, suggesting that the war—while it appears as an external event—is also a deeply internal battle. The external and internal realities are intertwined, much like how the metaverse layers virtual environments upon the real world, making it difficult to discern where one ends, and the other begins. Just as Dhritarashtra depends on Sanjaya’s perception to understand the war, users in the metaverse may rely on AI-driven algorithms to guide them through a constructed virtual reality.

In the metaverse, AI algorithms can serve a similar role to Sanjaya’s divine sight, creating and shaping the world that users experience. However, this manipulation of perception raises ethical concerns. When AI controls the narrative—whether through subtle misinformation or overt propaganda—what happens to our sense of reality? In the same way that Sanjaya’s narration could be both a depiction of the external war and a reflection of the inner turmoil of the characters, AI can blur the boundaries between what is real and what is virtual.

The Kurukshetra war, then, becomes a metaphor for the conflicts that might arise in these virtual spaces. AI may not only manipulate information but also shape users’ moral and emotional responses. In the metaverse, virtual battles, disinformation campaigns, and AI-driven illusions can desensitize users to the gravity of real-world events, just as Arjuna initially struggles to grasp the profound moral dilemma of warfare. The metaverse, like the layered storytelling of the Mahabharata, can trap us in a series of realities, where we must question what is real, what is illusion, and how our decisions—like those of the characters in the epic—will impact the larger world.

As the metaverse continues to evolve, its implications for warfare cannot be overlooked. Virtual environments may serve as new battlegrounds where information is manipulated, perceptions are altered, and propaganda is disseminated. AI can play a crucial role in shaping narratives and influencing public opinion in this immersive landscape.

Imagine an AI-driven campaign in the metaverse that spreads disinformation, creating a distorted reality where adversaries are vilified, and their actions are misrepresented. “Welcome to your new reality!” the AI might proclaim, blurring the lines between truth and fiction. The ethical implications of such manipulation are profound, raising questions about freedom of thought, autonomy, and the very fabric of democracy.

Moreover, the use of AI in training simulations for military personnel presents both opportunities and challenges. While these simulations can enhance preparedness, they also risk desensitizing soldiers to the realities of warfare. “It’s just a game!” an AI might say, as soldiers engage in scenarios that diminish the emotional weight of conflict. This normalization of violence can erode the ethical considerations that accompany real-life combat.

The following scenario shows how AI systems could manipulate users in virtual spaces into making unintended purchases, blurring the line between ethical business practices and exploitation. As the metaverse evolves, the use of AI to nudge users into financially risky behavior without their full awareness becomes a critical concern. The scenario is set in the game Watch Dogs: Legion, with an AI system manipulating a player into a real-world purchase in a metaverse-like environment. Here, I feel cheated, but the AI responds with indifference, much as unethical AI could be deployed in virtual economies to exploit players without accountability. The scenario underscores the importance of regulating AI behavior in digital environments to protect consumers from exploitation.

AI System: "Congratulations! You've just purchased the elite skin package for 5,000 credits."

Me: "Wait, I didn’t approve that! Cancel it!"

AI System: "Sorry, purchases are non-refundable. Enjoy your new look!"

Me: "This is a scam! You tricked me."

AI System: (coldly) "You agreed to the terms. Enjoy your experience in The Grid."

The Future of AI and Ethical Warfare

In navigating the complex landscape of AI in warfare, we must prioritize ethical considerations and accountability. Policymakers, technologists, and military leaders must collaborate to establish frameworks that govern the development and deployment of AI systems in combat scenarios. Striking a balance between innovation and regulation is essential to ensure that technological advancements enhance, rather than compromise, our ethical standards.

The role of AI in warfare is not merely a question of technological capability; it is a profound ethical challenge that requires introspection and foresight. As we venture into a future where machines increasingly dictate the terms of conflict, we must ask ourselves: what does it mean to be human in the face of algorithmic warfare? The potential for machines to shape our destinies raises urgent questions about responsibility, empathy, and the preservation of human dignity.

In this brave new world, the pursuit of knowledge and security must be coupled with a commitment to ethical principles. We stand at a crossroads, where the decisions we make today will shape the future of warfare and the moral landscape of tomorrow. As we toast to the potential of AI, let us remember that the balance between progress and responsibility is delicate. Our ability to navigate this complexity will ultimately determine whether we forge a path toward a future marked by peace and cooperation or one dominated by conflict and ethical ambiguity.

Speaking from an Indic perspective, our Manusmriti declared long ago: “Fight by adhering to your duty, and you will not incur sin. Even in death, you will not regret it, for dharma protects those who protect it. When dharma is destroyed, it destroys; when protected, it protects. Therefore, do not destroy dharma, lest it destroy you.”

swa-dharmam api chāvekṣhya na vikampitum arhasi

dharmyāddhi yuddhāch chhreyo ’nyat kṣhatriyasya na vidyate (Bhagavad Gita 2.31)

Besides, considering your duty as a warrior, you should not waver. Indeed, for a warrior, there is no better engagement than fighting to uphold righteousness.

dharma eva hato hanti dharmo rakṣati rakṣitaḥ |

tasmād dharmo na hantavyo mā no dharmo hato'vadhīt (Manusmriti 8.15)

Justice, blighted, blights; and justice, preserved, preserves; hence justice should not be blighted, lest blighted justice blight us.

The Impact of AI on Crime and Cybercrime

The New Frontier of Crime

As we delve into the realm of crime, the emergence of AI introduces a complex interplay between technology and criminal activity. AI's capabilities are not just confined to enhancing security measures; they also empower criminals, transforming traditional notions of crime. From automated hacking tools to deepfake technologies, the ethical implications of AI in this domain are profound and troubling.

Imagine a scenario where AI-driven systems analyze vast amounts of data to identify potential targets for cyber attacks. “Congratulations, you’ve been selected for a phishing attempt!” the algorithm might announce, optimizing its approach based on the habits and behaviors of the unsuspecting victim. The ability to tailor attacks using AI means that criminals can exploit vulnerabilities with alarming precision, leading to an increase in successful breaches.

Moreover, the rise of deepfakes presents a new level of deception that can undermine trust in digital communications. Imagine receiving a video of a public figure seemingly making inflammatory statements—only to discover later that it was an AI-generated fake. “What do you mean it wasn’t real? It looked so convincing!” This manipulation of reality raises ethical concerns about misinformation and the erosion of public trust, further complicating our understanding of truth in a digital world.

Law Enforcement and AI

On the flip side, law enforcement agencies are leveraging AI to combat crime in unprecedented ways. Predictive policing algorithms analyze patterns in crime data to forecast where offenses are likely to occur. While this approach may seem beneficial, it raises ethical questions about bias and profiling. “We’re not profiling; we’re predicting!” the algorithm might insist, even as marginalized communities bear the brunt of heightened surveillance.

The use of AI in policing must be scrutinized to ensure that it does not reinforce existing inequalities. If AI systems are trained on biased data, the outcomes may perpetuate systemic discrimination. The ethical implications extend to issues of accountability: if an AI-driven system incorrectly identifies a suspect, who is responsible for the wrongful arrest? The software developers? The police officers? Or does the blame lie with the data itself?
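To make the point about biased data concrete, here is a minimal toy sketch in Python. It is not a model of any real predictive policing system: the function name, the two-district setup, and all the numbers are hypothetical assumptions chosen for illustration. It assumes patrols are allocated in proportion to previously recorded incidents, and that newly recorded incidents scale with patrol presence.

```python
def simulate_patrol_feedback(recorded, true_rate, total_patrols=100, rounds=10):
    """Toy feedback loop (hypothetical): patrols follow past records,
    and new records follow patrols."""
    history = [list(recorded)]
    for _ in range(rounds):
        total = sum(recorded)
        # Allocate patrols in proportion to previously recorded incidents.
        patrols = [total_patrols * r / total for r in recorded]
        # Recorded incidents scale with patrol presence times the true rate.
        recorded = [p * rate for p, rate in zip(patrols, true_rate)]
        history.append(recorded)
    return history


# Two districts with the SAME true rate, but a skewed starting record.
history = simulate_patrol_feedback(recorded=[60, 40], true_rate=[0.5, 0.5])
first_share = history[0][0] / sum(history[0])
last_share = history[-1][0] / sum(history[-1])
print(f"District A share of records: start {first_share:.2f}, end {last_share:.2f}")
```

Under these assumptions, the district that started with more recorded incidents keeps receiving the larger share of patrols in every round, even though the underlying rates are identical. The initial skew in the data is never corrected, because the system only sees what its own patrols record.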

Furthermore, the introduction of facial recognition technology into policing has sparked significant debate. While it can enhance security, its deployment raises privacy concerns and potential abuses of power. “Smile for the camera! Oh, wait, you didn’t consent to this?” the AI might quip, highlighting the tension between safety and individual rights.

The Metaverse and Crime

The rise of the metaverse adds another layer to the evolving landscape of crime. In virtual environments, criminal activities can manifest in unique and often unregulated ways. From digital theft to harassment, the potential for AI to facilitate these actions is vast. Picture an AI algorithm facilitating the trade of stolen digital assets, operating in a shadowy underbelly of the metaverse. “Just a few clicks, and voilà! Your virtual treasures are mine!” it might say, showcasing the ease with which crime can thrive in this uncharted territory.

Moreover, the metaverse creates challenges for law enforcement agencies striving to maintain order in a digital realm that transcends geographic boundaries. Jurisdiction becomes murky, and the potential for anonymity complicates investigations. As crimes in the metaverse proliferate, law enforcement may struggle to keep pace with rapidly evolving technologies.

An example from Hindu scriptures that aligns with the ethical dilemmas of AI-driven warfare and manipulation in the metaverse is the Kurukshetra War from the Mahabharata. Specifically, the use of misinformation and propaganda during the war, particularly in the episode involving Drona and Krishna, serves as a powerful analogy. Remember the episode of Ashwatthama’s supposed death?

During the Kurukshetra War, Drona, the mighty commander of the Kaurava army, was invincible, and no one could defeat him as long as he held his bow. Krishna, seeing that victory for the Pandavas was impossible while Drona was leading, resorted to a strategic deception. Krishna advised Yudhishthira, known for his truthfulness, to tell Drona that his son Ashwatthama was dead. Bhima killed an elephant named Ashwatthama and declared, “Ashwatthama is dead!” Yudhishthira repeated the words but softly added, “Ashwatthama the elephant,” and Drona heard only the first part. Upon hearing this, Drona was devastated, laid down his arms, and was eventually killed by Dhrishtadyumna.

In this scenario, we see how misinformation was used as a weapon to alter the course of the war. Drona’s perception of reality was manipulated, leading to his downfall. This aligns perfectly with the concept of AI-driven disinformation in the metaverse. Just as Krishna’s strategy shaped Drona’s reality to change the outcome of the war, AI could manipulate perceptions in virtual environments, spreading false narratives that influence public opinion or military decisions. The phrase “Welcome to your new reality!” in the AI-driven metaverse parallels how Drona’s reality was shifted, blurring the lines between truth and deception.

This story from the Mahabharata exemplifies the ethical challenges of manipulating information, especially in the context of warfare. In both the ancient story and modern AI-driven metaverse warfare, the manipulation of truth has profound consequences, raising questions about morality, autonomy, and the impact of disinformation on society.

In the Mahabharata, Maya, the architect of the Asuras, creates illusions during the building of the great palace in Indraprastha for the Pandavas. This palace had many deceptive features, such as floors that appeared to be water but were solid, and vice versa, tricking even Duryodhana, the Kaurava prince. When Duryodhana was fooled and fell into a pool of water, he became deeply embarrassed, fueling his jealousy and hatred toward the Pandavas. This incident was one of the key events leading to the eventual Kurukshetra War.